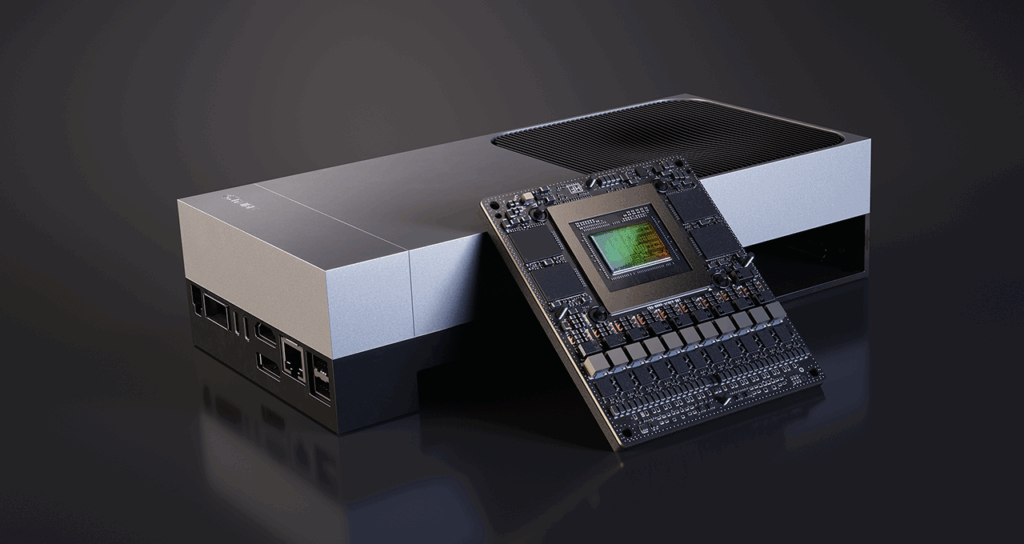

Last month Nvidia launched its powerful new AI and robotics developer kit, the Nvidia Jetson AGX Thor. The chipmaker says it delivers supercomputer-level AI performance in a compact, power-efficient module that enables robots and machines to run advanced “physical AI” tasks, such as perception, decision-making, and control, in real time, directly on the device without relying on the cloud.

It’s powered by the full-stack Nvidia Jetson software platform, which supports any popular AI framework and generative AI model. It is also fully compatible with Nvidia’s software stack from cloud to edge, including Nvidia Isaac for robotics simulation and development, Nvidia Metropolis for vision AI and Holoscan for real-time sensor processing.

Nvidia says it’s a big deal because it solves one of the most significant challenges in robotics: running multi-AI workflows to enable robots to have real-time, intelligent interactions with people and the physical world. Jetson Thor unlocks real-time inference, critical for highly performant physical AI applications spanning humanoid robotics, agriculture and surgical assistance.

Jetson AGX Thor delivers up to 2,070 FP4 TFLOPS of AI compute and runs within a 40–130 W power envelope. Built on the Blackwell GPU architecture, it incorporates 2,560 CUDA cores and 96 fifth-gen Tensor Cores, and supports technologies such as Multi-Instance GPU. The system includes a 14-core Arm Neoverse-V3AE CPU (1 MB L2 cache per core, 16 MB shared L3 cache), paired with 128 GB of LPDDR5X memory offering ~273 GB/s of bandwidth.
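To put those headline numbers in perspective, here is a rough back-of-the-envelope sketch in Python. It uses only the figures quoted above, plus two labeled assumptions: that the peak FP4 figure is reached at the top of the power envelope, and that a hypothetical 8-billion-parameter model with 4-bit weights is being run. The function names are illustrative, and the results are theoretical ceilings, not measured performance.

```python
# Vendor-quoted figures from the article for Jetson AGX Thor.
PEAK_FP4_TFLOPS = 2070.0      # peak AI compute
MAX_POWER_W = 130.0           # top of the 40-130 W envelope
MEM_BANDWIDTH_GB_S = 273.0    # approximate LPDDR5X bandwidth


def tflops_per_watt(tflops: float, watts: float) -> float:
    """Peak compute divided by power draw."""
    return tflops / watts


def decode_tokens_per_s_ceiling(params_billion: float,
                                bits_per_weight: int,
                                bandwidth_gb_s: float) -> float:
    """Upper bound on generated tokens per second for a memory-bound LLM.

    Assumes every weight is read from memory once per generated token,
    and ignores KV-cache traffic, activations, and compute time.
    """
    model_size_gb = params_billion * bits_per_weight / 8
    return bandwidth_gb_s / model_size_gb


if __name__ == "__main__":
    # Assumption: peak throughput is only available at maximum power.
    print(f"Peak efficiency: "
          f"{tflops_per_watt(PEAK_FP4_TFLOPS, MAX_POWER_W):.1f} TFLOPS/W")

    # Assumption: a hypothetical 8B-parameter model quantized to 4 bits.
    ceiling = decode_tokens_per_s_ceiling(8, 4, MEM_BANDWIDTH_GB_S)
    print(f"Bandwidth-bound decode ceiling: {ceiling:.1f} tokens/s")
```

Under these assumptions the module peaks at about 16 TFLOPS per watt, and memory bandwidth rather than raw compute caps single-stream text generation at roughly 68 tokens per second for the hypothetical model.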

There’s a lot of hype around this particular piece of kit, but Jetson Thor isn’t the only game in town. Other players, such as Intel’s Habana Gaudi, Qualcomm’s RB5 platform, and AMD/Xilinx adaptive SoCs, also target edge AI, robotics, and autonomous systems.

Here’s a comparison of what’s currently available and where each platform shines:

Edge AI robotics platform shootout

Nvidia Jetson AGX Thor

Specs & Strengths: Built on the Nvidia Blackwell GPU architecture, it delivers up to 2,070 FP4 TFLOPS and includes 128 GB of LPDDR5X memory, all within a 130 W envelope. That’s a 7.5 times leap in AI compute and 3 times better efficiency compared with the previous Jetson Orin line. It is equipped with 2,560 CUDA cores, 96 Tensor Cores, and a 14-core Arm Neoverse CPU. It features 1 TB of onboard NVMe storage and robust I/O including 100 GbE, and is optimized for real-time robotics workloads with support for LLMs and generative physical AI.

Use Cases & Reception: Early pilots and evaluations are under way at several companies, including Amazon Robotics, Boston Dynamics, Meta, and Caterpillar, with John Deere and OpenAI also running pilots.

Qualcomm Robotics RB5 Platform

Specs & Strengths: Powered by the QRB5165 SoC, it combines an octa-core Kryo 585 CPU, an Adreno 650 GPU, and a Hexagon Tensor Accelerator delivering 15 TOPS, along with multiple DSPs and an advanced Spectra 480 ISP capable of handling up to seven concurrent cameras and 8K video. Connectivity is a standout, with integrated 5G, Wi-Fi 6, and Bluetooth 5.1 for remote, low-latency operations. It is built for security, with a Secure Processing Unit, cryptographic support, secure boot, and FIPS certification.

Use Cases & Development Support: Ideal for use cases such as SLAM, autonomy, and AI inferencing in robots and drones. Supports Linux, Ubuntu, and ROS 2.0 with rich SDKs for vision, AI, and robotics development.

(Read more about the Qualcomm Robotics RB5 platform on Robot Report)

AMD Adaptive SoCs and FPGA Accelerators

Key Capabilities: AMD’s AI Engine ML (AIE-ML) architecture provides significantly higher TOPS per watt by optimizing for INT8 and bfloat16 workloads.

Innovation Highlight: Academic projects like EdgeLLM showcase CPU–FPGA architectures (using the AMD/Xilinx VCU128) outperforming GPUs in LLM tasks, achieving 1.7 times higher throughput and 7.4 times better energy efficiency than Nvidia’s A100.
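Taken together, the two EdgeLLM ratios imply something about relative power draw. The short Python sketch below works that out, assuming "energy efficiency" means throughput per watt (an assumption, since the definition is not spelled out here). The function name is illustrative only.

```python
def implied_power_ratio(throughput_ratio: float,
                        efficiency_ratio: float) -> float:
    """Power draw of system A relative to system B.

    Assumes efficiency = throughput / power, so
    power_A / power_B = (throughput_A / throughput_B)
                        / (efficiency_A / efficiency_B).
    """
    return throughput_ratio / efficiency_ratio


if __name__ == "__main__":
    # Ratios quoted above: CPU-FPGA system relative to the A100.
    ratio = implied_power_ratio(throughput_ratio=1.7, efficiency_ratio=7.4)
    print(f"Implied power draw relative to A100: {ratio:.0%}")
```

On that reading, the CPU–FPGA setup would be drawing a bit under a quarter of the A100's power while still delivering higher throughput.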

Drawbacks: Powerful but requires specialized development and lacks an integrated robotics platform and ecosystem.

The Intel Habana Gaudi is more common in data centers for training and is less prevalent in embedded robotics due to form factor limitations.