Full-stack integration of compute, cooling, and power provides global clients with disruptive deployment speed, cost-effectiveness, and energy efficiency.

Super X AI Technology Limited unveiled its data center-scale solution, the SuperX Modular AI Factory. This solution is engineered to overcome the core challenges of traditional AI data center construction—long lead times, high costs, massive energy consumption, and limited scalability—offering a disruptive and innovative approach for the AI era.

The SuperX Modular AI Factory: a full-stack solution

As global enterprises race to deploy Large Language Models (LLMs) and other AI applications at scale, demand for AI infrastructure is growing rapidly. However, the typical 18-to-24-month construction cycle for traditional data centers has become a critical bottleneck. The SuperX Modular AI Factory addresses this by prefabricating and deeply integrating compute, cooling, and power systems. By minimizing on-site construction, this approach is expected to reduce delivery and deployment time to under six months, enabling clients to seize market opportunities with unprecedented speed.

Core advantages at a glance:

| Feature | Key metrics | Value proposition |
| --- | --- | --- |
| Ultra-high-density compute | Up to 20MW per module; configured with 6 SuperX NeuroBlock core compute units (supporting up to 144 NVIDIA GB200 NVL72 systems[1] in total) | Capable of handling next-generation AI workloads, achieving extreme compute density. |
| Hyper-flexible scalability | Modular architecture supporting 1-to-N elastic deployment | Expand on demand and scale seamlessly as business grows. |
| All-in-one full stack | Integrated “Compute + Cooling + Power” | Delivers high-performance AI servers, high-density liquid cooling, and HVDC power systems, avoiding fragmented integration and enhancing system efficiency and reliability. |
| High reliability | HVDC design eliminates the need for a separate UPS, enhancing safety | Supports granular management to proactively prevent overload and overheating risks; batteries can be located away from the core data hall, improving data center security. |
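The module figures in the table can be cross-checked with simple arithmetic. The sketch below is a back-of-the-envelope calculation, not a SuperX sizing tool: it assumes each GB200 NVL72 system occupies one rack drawing about 140kW (the NeuroBlock design figure given in footnote [2]), and all constant and function names are hypothetical.

```python
# Back-of-the-envelope check of the module figures quoted in this release.
# Assumption: one GB200 NVL72 system = one rack at ~140 kW (footnote [2]).

NEUROBLOCKS_PER_MODULE = 6       # per the table above
SYSTEMS_PER_NEUROBLOCK = 24      # per the NeuroBlock description
KW_PER_RACK = 140                # NeuroBlock design target, footnote [2]
TRADITIONAL_KW_PER_RACK = 20     # traditional data center, footnote [2]


def systems_per_module(neuroblocks: int = NEUROBLOCKS_PER_MODULE,
                       systems_per_block: int = SYSTEMS_PER_NEUROBLOCK) -> int:
    """Total NVL72 systems in one module."""
    return neuroblocks * systems_per_block


def estimated_it_load_mw(systems: int, kw_per_rack: float = KW_PER_RACK) -> float:
    """Approximate IT load in MW, assuming one rack per system."""
    return systems * kw_per_rack / 1000


def density_ratio(new_kw: float = KW_PER_RACK,
                  old_kw: float = TRADITIONAL_KW_PER_RACK) -> float:
    """How many times denser the new racks are than traditional ones."""
    return new_kw / old_kw


if __name__ == "__main__":
    n = systems_per_module()
    print(n)                                                     # 144
    print(round(estimated_it_load_mw(SYSTEMS_PER_NEUROBLOCK), 2))  # 3.36 (MW per NeuroBlock)
    print(round(estimated_it_load_mw(n), 2))                     # 20.16 (MW per module)
    print(density_ratio())                                       # 7.0
```

Under these assumptions a NeuroBlock draws about 3.36MW, within its stated 3.5MW capacity, and a six-block module about 20.16MW, close to the quoted 20MW. Actual per-rack draw depends on configuration and workload, so these numbers are estimates only.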

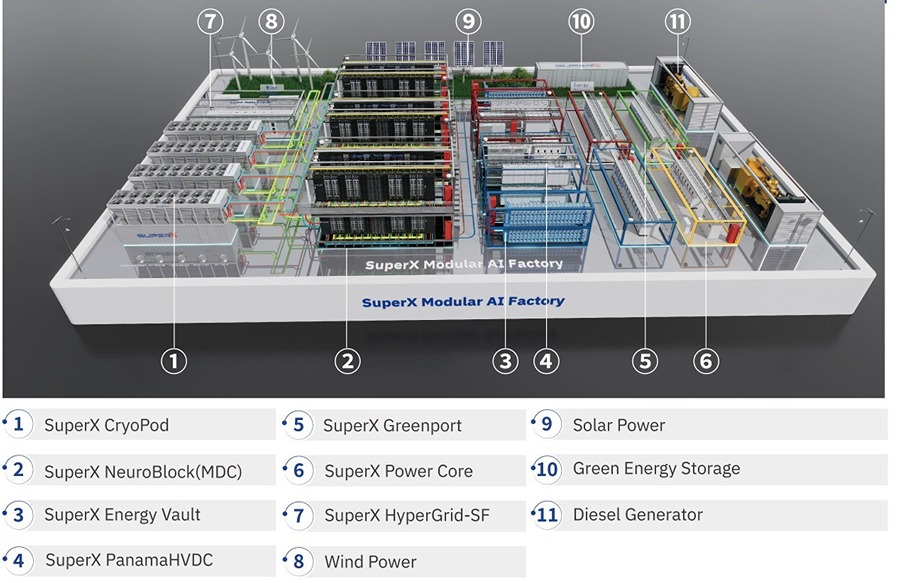

The SuperX Modular AI Factory is a full-stack ecosystem comprising multiple prefabricated core components, including:

- SuperX NeuroBlock: A single core compute unit that supports up to 24 NVIDIA GB200 NVL72 systems with a power capacity of up to 3.5MW.

- SuperX CryoPod: A dual-source cooling system with options for dry coolers or chillers, offering low-energy, water-free operation.

- SuperX Energy Vault & Green Energy Storage: An energy storage system designed to extend the utilization of green power.

- SuperX Greenport & HyperGrid: Prefabricated HVDC power distribution for rapid deployment and high efficiency.

- SuperX Power Core: Factory-prefabricated and ready for on-site installation, reducing footprint and supporting biodiesel to lower carbon emissions.

The innovation of the SuperX Modular AI Factory lies in its high level of integration of compute, cooling, and power, which transforms a “complex custom project” into a “standardized product” that is plug-and-play ready. A 20MW module is estimated to require a physical footprint of only about 6,000 m², and capacity can be expanded virtually without limit using a “building-block” approach.
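Because capacity grows in whole modules, a rough 1-to-N plan comes down to rounding up. The following sketch assumes every module adds the estimated 20MW and 6,000 m² with no shared or overlapping site area; `modules_needed`, `footprint_m2`, and `plan` are illustrative names, not part of any SuperX tooling.

```python
import math

# Assumed per-module figures, taken from the estimates in this release.
MODULE_CAPACITY_MW = 20.0
MODULE_FOOTPRINT_M2 = 6_000


def modules_needed(target_mw: float, capacity_mw: float = MODULE_CAPACITY_MW) -> int:
    """Smallest number of whole modules whose combined capacity covers target_mw."""
    if target_mw <= 0:
        raise ValueError("target_mw must be positive")
    return math.ceil(target_mw / capacity_mw)


def footprint_m2(modules: int, per_module_m2: int = MODULE_FOOTPRINT_M2) -> int:
    """Estimated site area, assuming module footprints simply add up (linear model)."""
    return modules * per_module_m2


def plan(target_mw: float) -> tuple[int, int]:
    """Return (module count, estimated footprint in m²) for a target IT capacity."""
    n = modules_needed(target_mw)
    return n, footprint_m2(n)


if __name__ == "__main__":
    for target in (20, 50, 100):
        print(target, plan(target))
```

For example, `plan(50)` returns `(3, 18000)`: three modules on roughly 18,000 m². Real sites also need space for access, grid connections, and other site-specific overheads, which this linear model ignores.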

Redefining AI infrastructure: the four core values

SuperX’s solution is not merely a product update but a systematic rethinking of AI infrastructure standards across four dimensions:

- Hyper-Speed Delivery: All core modules—compute, cooling, and power—are prefabricated, integrated, and tested in the factory. On-site assembly is a rapid and systematic process, expected to cut delivery and deployment time to under six months, making AI compute nearly “plug-and-play.”

- Hyper-Density: The core compute unit, SuperX NeuroBlock, supports up to 24 NVIDIA GB200 NVL72 systems with a power capacity of up to 3.5MW, achieving a rack power density about seven times that of traditional solutions[2]. Deeply coupled with high-density liquid cooling and HVDC power, it delivers this compute in a significantly smaller footprint.

- Hyper-Efficiency: The solution fully adopts High-Voltage Direct Current (HVDC) technology, boosting end-to-end power efficiency to over 98.5%. Combined with advanced liquid cooling, this drives the overall Power Usage Effectiveness (PUE) to as low as 1.15, delivering over 23% in total energy savings compared to traditional air-cooled systems (PUE ~1.5[3]).

- Hyper-Flexibility: Modularity is the core of the solution. Clients can start with a single module and scale seamlessly from 1-to-N as needed. This significantly reduces initial capital expenditure (CAPEX) and perfectly aligns with the rapid, iterative nature of AI business models.
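Because PUE is total facility energy divided by IT energy, the savings claim for a fixed IT load can be reproduced as `1 - PUE_new / PUE_baseline`. The sketch below applies that formula to the PUE values quoted in this section and in footnote [3]; the function names and the constant 20MW, year-round IT load in the example are assumptions for illustration.

```python
HOURS_PER_YEAR = 8_760


def facility_energy_mwh(it_energy_mwh: float, pue: float) -> float:
    """Total facility energy; by definition, PUE = facility energy / IT energy."""
    return it_energy_mwh * pue


def relative_savings(pue_new: float, pue_baseline: float) -> float:
    """Fraction of facility energy saved when the same IT load runs at a lower PUE."""
    return 1 - pue_new / pue_baseline


if __name__ == "__main__":
    print(f"{relative_savings(1.15, 1.5):.1%}")   # 23.3% vs. the ~1.5 air-cooled baseline
    print(f"{relative_savings(1.15, 1.57):.1%}")  # 26.8% vs. the 2021 Uptime average

    # Hypothetical example: a 20MW IT load running at full power all year.
    it_mwh = 20 * HOURS_PER_YEAR
    saved = facility_energy_mwh(it_mwh, 1.5) - facility_energy_mwh(it_mwh, 1.15)
    print(f"{saved:,.0f} MWh saved per year")      # 61,320 MWh saved per year
```

The HVDC efficiency figure (over 98.5%) is best read as one contributor to the lower PUE, since power distribution losses already count toward facility energy, rather than as a separate saving to multiply in.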

SuperX’s strategic upgrade to a “standard-setter”

The most critical factors in deploying AI compute are speed and long-term energy savings. Traditional data center construction models struggle to keep pace with the demands of the AI era. The SuperX Modular AI Factory is designed to address this bottleneck, enabling faster deployment and more energy-efficient operations, which we believe can enhance our clients’ return on investment and significantly reduce market risks. The launch of the SuperX Modular AI Factory signals our transformation of AI infrastructure from an “engineering project” into a “standardized product”.

This launch marks a strategic upgrade for SuperX, evolving from an AI infrastructure integrator to a solution provider that is setting the standards for the next generation of AI factories and driving the industry-wide transition from traditional data centers to AI factories.

[1] For specifications of the NVIDIA GB200 NVL72, please refer to the official NVIDIA website: https://www.nvidia.com/en-us/data-center/gb200-nvl72/

[2] The rack power density of traditional data centers is around 20kW per rack. SuperX’s NeuroBlock is designed for around 140kW per rack, about 7 times higher.

[3] According to the Uptime Institute, the average PUE was 1.57 in 2021.

For more information, visit superx.sg.